Clustering Analysis: Difference between revisions


Clustering Analysis can be used to explain what kind of cases the eventlog contains. It divides cases into groups so that the cases within each group are as similar as possible in terms of their case attribute values and occurred event types. Clustering is based on unsupervised machine learning and uses the ''kmodes'' algorithm with categorized values for case attribute values and event type occurrences. Due to the nature of the algorithm, different clustering runs may produce different results. Also, the clustering is usually performed not for all cases but for a sample of cases (to improve performance): when the sample is representative, it should give results as good as those from the entire dataset. See this [https://en.wikipedia.org/wiki/Cluster_analysis Wikipedia article] for more about the idea behind clustering.

Clustering analysis is an easy way to understand and explain the eventlog without knowing anything about it beforehand. It can also be used to check data integrity, as the analysis might reveal that the eventlog contains data from two distinct processes that cannot be meaningfully compared to each other.

[[File:Clusteringanalysis.png|900px]]

== Using Clustering Analysis ==

Clustering analysis is available as a view in the [[Navigation_Menu#Clustering_Analysis|Navigation menu]]. When creating a custom dashboard, the clustering can also be added to the dashboard by opening it as a preset. The dashboard remembers the clustering settings if they are changed.

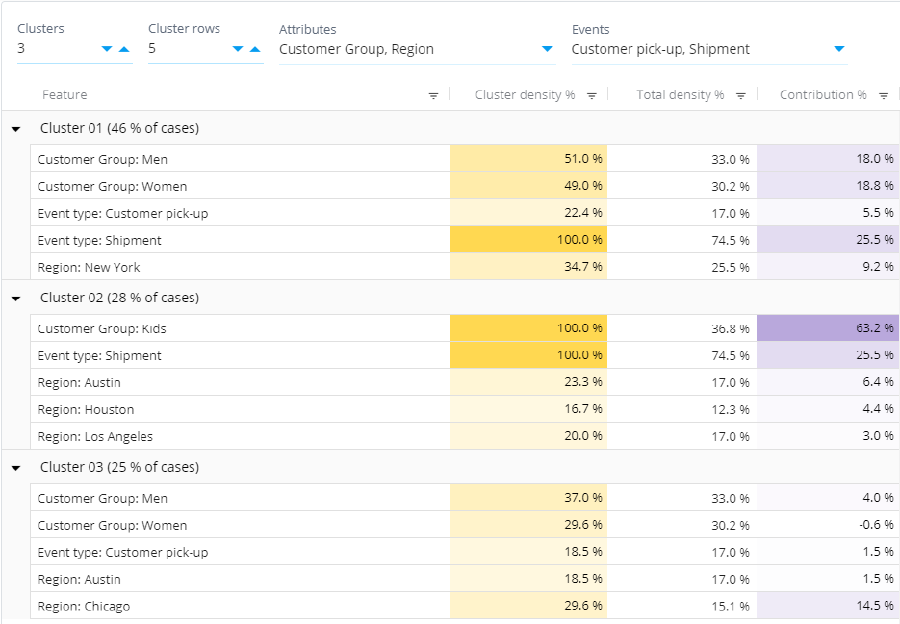

Clustering analysis is shown in a table where the rows are grouped so that each group is a cluster and each row shows one describing feature of the cluster. The table has the following columns:

* '''Feature''': The describing feature of the cluster, i.e., the case attribute and its value, or the name of the occurred event type.
* '''Cluster density %''': Share of cases having this feature within the cluster, i.e., the number of cases in this particular cluster having the value shown on the row, divided by the number of cases in the cluster and multiplied by 100.
* '''Total density %''': Share of cases having this feature in the entire eventlog, i.e., the total number of cases having the value shown on the row, divided by the total number of cases and multiplied by 100.
* '''Contribution %''': Explains how much more common this feature is in this cluster compared to the entire eventlog. The higher the value, the more the feature characterizes this particular cluster. The contribution percentage is calculated as the cluster density percentage minus the total density percentage.
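The three percentage columns can be illustrated with a small worked example. This is only a sketch of the arithmetic described above: the function name <code>feature_metrics</code>, the feature labels and the sample data are hypothetical and are not part of the product.

```python
def feature_metrics(cases, cluster_of, cluster_id, feature):
    """Return (cluster density %, total density %, contribution %) for one feature."""
    # Cases that were assigned to the cluster of interest.
    in_cluster = [c for c, k in zip(cases, cluster_of) if k == cluster_id]
    cluster_density = 100.0 * sum(feature in c for c in in_cluster) / len(in_cluster)
    total_density = 100.0 * sum(feature in c for c in cases) / len(cases)
    # Contribution is the cluster density minus the total density.
    return cluster_density, total_density, cluster_density - total_density


# Hypothetical data: each case is represented by the set of features it has.
cases = [
    {"Region=EU", "Event: Invoice sent"},
    {"Region=EU", "Event: Invoice sent"},
    {"Region=EU"},
    {"Region=US", "Event: Invoice sent"},
    {"Region=US"},
    {"Region=US"},
    {"Region=US"},
    {"Region=US"},
]
cluster_of = [0, 0, 0, 0, 1, 1, 1, 1]  # assumed cluster assignment per case

print(feature_metrics(cases, cluster_of, 0, "Region=EU"))  # (75.0, 37.5, 37.5)
```

Here three of the four cases in cluster 0 have <code>Region=EU</code> (75 %), while three of all eight cases have it (37.5 %), so the contribution is 37.5 percentage points. A negative contribution would mean the feature is rarer in the cluster than in the eventlog as a whole.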

Note that with some special datasets, fewer clusters may be returned than requested. This occurs, for example, when there are fewer cases than requested clusters, or when the features of the cases do not have enough distinct values.

== Clustering Analysis Settings ==

Clustering analysis has the following settings:

* '''Clusters''': Number of clusters into which the cases are divided.
* '''Cluster rows''': Number of describing features shown for each cluster. The features are shown in order of contribution, strongest first.
* '''Attributes''': Case attributes that are included in the clustering analysis. If none is selected, all case attributes are included. You can restrict the selection if you want the clustering to be based on only certain features.
* '''Events''': Event types whose occurrences are included in the clustering analysis. If none is selected, all event types are included.

The size of the sample used in the clustering can also be changed in the chart settings (in the ''Analyze'' tab).

== Clustering Analysis Calculation ==

The clustering analysis is calculated as follows:

# A random sample of cases is taken from the entire eventlog.
# The sampled cases are clustered using the K-means algorithm. As a result, each case belongs to a certain cluster.
# [[Root_Causes|Root causes analysis]] is run to find the characterizing features of each cluster.

The root causes analysis is used so that the clustering analysis does not need to show the individual cases in each cluster but can instead show the features that describe each cluster (a long list of individual cases would not be very easy to read). Note that the case attribute and event type settings determine both which features are included in the clustering phase and which features the root causes analysis is run for.
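The three steps can be sketched roughly as follows. This is an illustrative toy, not the product's implementation: the clustering step here is a minimal k-modes-style loop over categorical values (chosen because it is short and needs no libraries), the characterization step simply ranks features by contribution instead of running the actual root causes analysis, and all function names, parameters and data are hypothetical.

```python
import random
from collections import Counter


def sample_cases(cases, sample_size, seed):
    """Step 1: take a random sample (all cases if there are fewer than requested)."""
    rng = random.Random(seed)
    return rng.sample(cases, min(sample_size, len(cases)))


def hamming(a, b):
    """Number of attributes on which two cases differ."""
    return sum(x != y for x, y in zip(a, b))


def k_modes(cases, k, seed, max_iter=20):
    """Step 2: assign each case to its nearest mode, then recompute the modes."""
    rng = random.Random(seed)
    distinct = list(dict.fromkeys(cases))  # unique cases, original order kept
    # With fewer distinct cases than k, fewer clusters are produced.
    modes = rng.sample(distinct, min(k, len(distinct)))
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(len(modes)), key=lambda j: hamming(c, modes[j]))
            for c in cases
        ]
        if new_labels == labels:
            break  # assignments are stable
        labels = new_labels
        for j in range(len(modes)):
            members = [c for c, l in zip(cases, labels) if l == j]
            if members:
                # New mode: the most common value of each attribute.
                modes[j] = tuple(
                    Counter(col).most_common(1)[0][0] for col in zip(*members)
                )
    return labels


def top_features(cases, labels, cluster_id, names, rows):
    """Step 3 (simplified): rank attribute=value features by contribution."""
    members = [c for c, l in zip(cases, labels) if l == cluster_id]
    scored = []
    for i, name in enumerate(names):
        for value in sorted({c[i] for c in members}):
            cluster_pct = 100.0 * sum(c[i] == value for c in members) / len(members)
            total_pct = 100.0 * sum(c[i] == value for c in cases) / len(cases)
            scored.append((cluster_pct - total_pct, f"{name}={value}"))
    scored.sort(reverse=True)
    return scored[:rows]


# Hypothetical eventlog: each case is a tuple of categorical attribute values.
names = ("Region", "Channel")
cases = [("EU", "Web")] * 5 + [("US", "Phone")] * 5
sample = sample_cases(cases, sample_size=8, seed=1)
labels = k_modes(sample, k=2, seed=1)
for cluster_id in sorted(set(labels)):
    print(cluster_id, top_features(sample, labels, cluster_id, names, rows=2))
```

Because both the sample and the initial modes are drawn at random, changing <code>seed</code> can change the result, which mirrors the remark that different clustering runs may end up with different results.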

Technically, the feature data used by the clustering analysis are numeric values between 0 and 1. Case attribute values are converted into that format as follows:

* Numbers and dates are scaled between 0 and 1 (the minimum value is converted to 0 and the maximum to 1).
* Textual columns are "one-hot" encoded into multiple columns so that each unique value gets its own column. In each such column, the value 1 means that the case has that particular attribute value, and the value 0 means that the attribute value is something else.
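The two conversions can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names <code>min_max_scale</code> and <code>one_hot</code> and the sample values are hypothetical, dates are converted to day numbers before scaling, and the handling of a constant column (mapped to 0) is an assumption that the text above does not specify.

```python
from datetime import date


def min_max_scale(values):
    """Scale numbers so that the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant column is mapped to 0 to avoid division by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Return one 0/1 column per unique value, keyed by that value."""
    return {u: [1 if v == u else 0 for v in values] for u in sorted(set(values))}


# Hypothetical case attribute columns.
amounts = [100, 250, 400]
dates = [date(2023, 1, 1), date(2023, 1, 11), date(2023, 1, 21)]
regions = ["EU", "US", "EU"]

print(min_max_scale(amounts))                         # [0.0, 0.5, 1.0]
print(min_max_scale([d.toordinal() for d in dates]))  # [0.0, 0.5, 1.0]
print(one_hot(regions))                               # {'EU': [1, 0, 1], 'US': [0, 1, 0]}
```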

Latest revision as of 22:55, 1 September 2023
